This blog breaks down the available pricing and deployment options, and the tools that support scalable, cost-conscious AI deployments.

When you're building with AI, every decision counts, especially when it comes to cost. Whether you're just getting started or scaling enterprise-grade applications, the last thing you want is unpredictable pricing or rigid infrastructure slowing you down. Azure OpenAI is designed with that in mind: flexible enough for early experiments, powerful enough for global deployments, and priced to match how you actually use it.

From startups to the Fortune 500, more than 60,000 customers are choosing Azure AI Foundry, not just for access to foundational and reasoning models, but because it meets them where they are, with deployment options and pricing models that align to real business needs. This is about more than just AI: it's about making innovation sustainable, scalable, and accessible.

Flexible pricing models that match your needs

Azure OpenAI supports three distinct pricing models designed to meet different workload profiles and business requirements:

- Standard: For bursty or variable workloads where you want to pay only for what you use.

- Provisioned: For high-throughput, performance-sensitive applications that require consistent throughput.

- Batch: For large-scale jobs that can be processed asynchronously at a discounted rate.

Each approach is designed to scale with you, whether you're validating a use case or deploying across business units.

Standard

The Standard deployment model is ideal for teams that want flexibility. You're charged per API call based on tokens consumed, which helps optimize budgets during periods of lower usage.

Best for: Development, prototyping, or production workloads with variable demand.

You can choose between:

- Global deployments: To ensure optimal latency across geographies.

- OpenAI Data Zones: For more flexibility and control over data privacy and residency.

With all deployment selections, data is stored at rest within the Azure region you chose for your resource.
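To make the per-token billing idea concrete, here is a minimal sketch of how a team might estimate the cost of a single call. The function name and both prices are hypothetical placeholders chosen for illustration; they are not real Azure OpenAI rates, so substitute the figures from the current pricing page.

```python
def estimate_standard_cost(input_tokens, output_tokens,
                           input_price_per_million, output_price_per_million):
    """Estimate the charge for one call under per-token (pay-as-you-go) billing."""
    input_cost = input_tokens / 1_000_000 * input_price_per_million
    output_cost = output_tokens / 1_000_000 * output_price_per_million
    return input_cost + output_cost


# Placeholder prices in USD per one million tokens. NOT real Azure OpenAI rates.
EXAMPLE_INPUT_PRICE = 2.00
EXAMPLE_OUTPUT_PRICE = 8.00

cost = estimate_standard_cost(
    input_tokens=1_500,
    output_tokens=500,
    input_price_per_million=EXAMPLE_INPUT_PRICE,
    output_price_per_million=EXAMPLE_OUTPUT_PRICE,
)
print(f"Estimated cost for one call: ${cost:.4f}")
```

Because the charge tracks tokens consumed, a quiet hour with few calls costs correspondingly little, which is the property that makes this model a fit for variable demand.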

Batch

The Batch model is designed for high-efficiency, large-scale inference. Jobs are submitted and processed asynchronously, with a 24-hour target turnaround, at up to 50% less than Global Standard pricing. Batch also features large-scale workload support, so you can process bulk requests at lower cost with minimal friction.

Best for: Large-volume tasks with flexible latency needs.

Typical use cases include:

- Large-scale data processing and content generation.

- Data transformation pipelines.

- Model evaluation across extensive datasets.
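As a rough illustration of what preparing a batch job can look like, the sketch below turns a list of prompts into JSONL request lines and applies the discount described above. The field names (`custom_id`, `method`, `url`, `body`) reflect the request shape as we understand the Batch API to accept it; treat them as an assumption and check the current documentation. The deployment name `my-batch-deployment` and the helper names are hypothetical.

```python
import json


def build_batch_lines(prompts, deployment_name):
    """Build one JSON line per prompt for a batch input file (assumed schema)."""
    lines = []
    for index, prompt in enumerate(prompts):
        request = {
            "custom_id": f"task-{index}",  # lets you match results back to inputs
            "method": "POST",
            "url": "/chat/completions",
            "body": {
                "model": deployment_name,  # hypothetical deployment name
                "messages": [{"role": "user", "content": prompt}],
            },
        }
        lines.append(json.dumps(request))
    return lines


def discounted_cost(standard_cost, discount=0.5):
    """Apply a batch discount; 0.5 is the 'up to 50%' upper bound, not a guarantee."""
    return standard_cost * (1 - discount)


lines = build_batch_lines(
    ["Summarize document A.", "Summarize document B."],
    deployment_name="my-batch-deployment",
)
print("\n".join(lines))
print(f"Cost at the maximum discount: {discounted_cost(100.0):.2f}")
```

The `custom_id` matters because results arrive asynchronously, so each response has to be matched back to its originating request.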

Customer in action: Ontada

Ontada, a McKesson company, used the Batch API to transform over 150 million oncology documents into structured insights. Applying LLMs across 39 cancer types, they unlocked 70% of previously inaccessible data and cut document processing time by 75%. Learn more in the Ontada case study.

Provisioned

The Provisioned model provides dedicated throughput via Provisioned Throughput Units (PTUs). This enables stable latency and high throughput, ideal for production use cases requiring real-time performance or processing at scale. Commitments can be hourly, monthly, or yearly, with corresponding discounts.

Best for: Enterprise workloads with predictable demand and the need for consistent performance.

Common use cases:

- High-volume retrieval and document processing scenarios.

- Call center operations with predictable traffic hours.

- Retail assistants with consistently high throughput.
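One way to reason about when predictable demand justifies a commitment is a simple break-even calculation between pay-as-you-go and provisioned pricing. The sketch below is deliberately simplified: the function names and every price are hypothetical placeholders, and it ignores that the throughput a PTU delivers depends on the model and workload.

```python
def payg_hourly_cost(tokens_per_hour, price_per_million_tokens):
    """Hourly pay-as-you-go cost at a blended (input + output) token price."""
    return tokens_per_hour / 1_000_000 * price_per_million_tokens


def provisioned_hourly_cost(ptu_count, price_per_ptu_hour):
    """Hourly cost of a provisioned deployment, independent of traffic."""
    return ptu_count * price_per_ptu_hour


def break_even_tokens_per_hour(ptu_count, price_per_ptu_hour,
                               price_per_million_tokens):
    """Token volume per hour above which provisioned becomes the cheaper option."""
    fixed_cost = provisioned_hourly_cost(ptu_count, price_per_ptu_hour)
    return fixed_cost / price_per_million_tokens * 1_000_000


# Placeholder figures for illustration only. NOT real Azure OpenAI prices.
threshold = break_even_tokens_per_hour(
    ptu_count=50,
    price_per_ptu_hour=1.00,
    price_per_million_tokens=5.00,
)
print(f"Break-even volume: {threshold:,.0f} tokens per hour")
```

A workload that stays above the threshold for most hours is a candidate for provisioned capacity; one that only occasionally reaches it is usually better served by Standard.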

Customers in action: Visier and UBS

- Visier built “Vee,” a generative AI assistant that serves up to 150,000 users per hour. By using PTUs, Visier made response times three times faster than with pay-as-you-go models and reduced compute costs at scale. Read the case study.

- UBS created ‘UBS Red’, a secure AI platform supporting 30,000 employees across regions. PTUs allowed the bank to deliver reliable performance with region-specific deployments across Switzerland, Hong Kong, and Singapore. Read the case study.

Deployment types for standard and provisioned

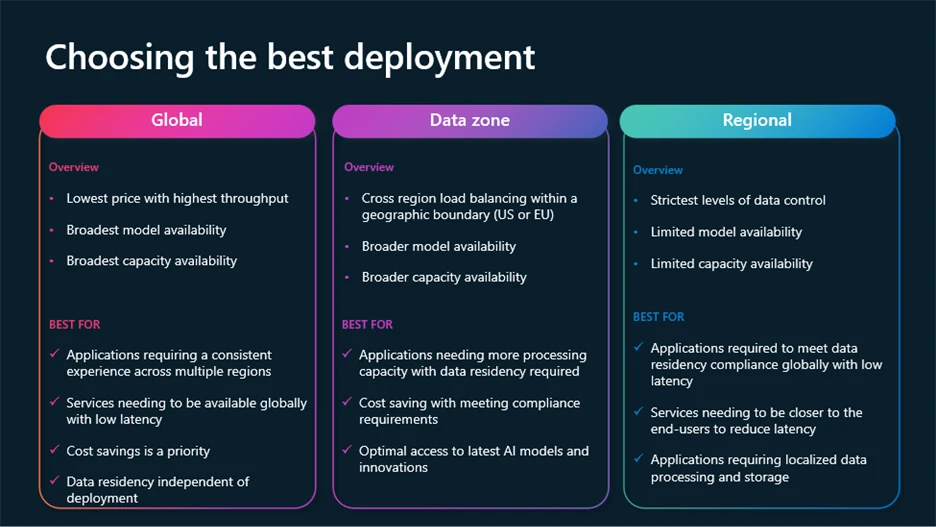

To meet growing requirements for control, compliance, and cost optimization, Azure OpenAI supports multiple deployment types:

- Global: Most cost-effective; routes requests through the global Azure infrastructure, with data residency at rest.

- Regional: Keeps data processing in a specific Azure region (28 available today), with data residency both at rest and during processing in the selected region.

- Data Zones: Offers a middle ground, where processing remains within geographic zones (E.U. or U.S.) for added compliance without the full regional cost overhead.

Global and Data Zone deployments are available across Standard, Provisioned, and Batch models.
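The trade-off between the three deployment types can be summarized as a small decision helper. This is only a sketch of the reasoning in the list above, with a hypothetical function name and flags; real decisions will also weigh model availability, quotas, and price.

```python
def choose_deployment_type(must_process_in_region=False,
                           must_process_in_zone=False):
    """Pick the least restrictive deployment type that meets residency needs."""
    if must_process_in_region:
        # Processing and data at rest both stay in one Azure region.
        return "Regional"
    if must_process_in_zone:
        # Processing stays inside a geographic zone (E.U. or U.S.).
        return "Data Zone"
    # No processing constraint: the most cost-effective option.
    return "Global"


print(choose_deployment_type())
print(choose_deployment_type(must_process_in_zone=True))
print(choose_deployment_type(must_process_in_region=True))
```

The strictest requirement is checked first, so a workload that sets both flags lands on Regional.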

Dynamic features help you cut costs while optimizing performance

Several new dynamic features, designed to help you get the best results at lower cost, are now available.

- Model router for Azure AI Foundry: A deployable AI chat model that automatically selects the best underlying chat model to respond to a given prompt. Well suited to diverse use cases, model router delivers high performance while saving on compute costs where possible, all packaged as a single model deployment.

- Batch large-scale workload support: Processes bulk requests at lower cost. Efficiently handle large-scale workloads to reduce processing time, with a 24-hour target turnaround, at up to 50% less cost than Global Standard.

- Provisioned throughput dynamic spillover: Provides seamless overflow for your high-performing applications on provisioned deployments. Manage traffic bursts without service disruption.

- Prompt caching: Built-in optimization for repeatable prompt patterns. It accelerates response times, scales throughput, and helps cut token costs significantly.

- Azure OpenAI monitoring dashboard: Continuously track performance, usage, and reliability across your deployments.
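To picture what spillover does during a traffic burst, the toy model below splits incoming load between provisioned capacity and an overflow path. It is a conceptual illustration only, not how the service is implemented: the function name, the capacity figure, and the assumption that overflow is served by a pay-as-you-go deployment are all ours.

```python
def route_requests(incoming_requests, provisioned_capacity):
    """Split load into what provisioned capacity absorbs and what spills over."""
    served_by_provisioned = min(incoming_requests, provisioned_capacity)
    spilled_over = incoming_requests - served_by_provisioned
    return served_by_provisioned, spilled_over


# Hypothetical requests per minute across a short burst.
traffic = [80, 100, 140, 90]
CAPACITY = 100  # assumed requests per minute the provisioned deployment handles

for minute, load in enumerate(traffic):
    provisioned, overflow = route_requests(load, CAPACITY)
    print(f"minute {minute}: provisioned={provisioned}, overflow={overflow}")
```

In this model no request is rejected: load beyond capacity is simply redirected, which is the behavior the feature description promises for bursts.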

To learn more about these features and how to leverage the latest innovations in Azure AI Foundry models, watch this session from Build 2025 on optimizing Gen AI applications at scale.

Beyond pricing and deployment flexibility, Azure OpenAI integrates with Microsoft Cost Management tools to give teams visibility and control over their AI spend.

Capabilities include:

- Real-time cost analysis.

- Budget creation and alerts.

- Support for multi-cloud environments.

- Cost allocation and chargeback by team, project, or department.

These tools help finance and engineering teams stay aligned, making it easier to understand usage trends, track optimizations, and avoid surprises.
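The chargeback and budget-alert ideas can be prototyped locally before wiring them into real tooling. The sketch below does not call Microsoft Cost Management; the function names, team names, amounts, and alert thresholds are hypothetical and exist only to show the logic.

```python
from collections import defaultdict


def allocate_costs(usage_records):
    """Sum cost per team from (team, cost) records for chargeback."""
    totals = defaultdict(float)
    for team, cost in usage_records:
        totals[team] += cost
    return dict(totals)


def budget_alerts(spend, budget, thresholds=(0.5, 0.8, 1.0)):
    """Return the budget fractions that current spend has reached or passed."""
    return [fraction for fraction in thresholds if spend >= budget * fraction]


# Hypothetical usage records: (team, cost in USD).
records = [("search", 10.0), ("support", 5.0), ("search", 2.5)]
per_team = allocate_costs(records)
print(per_team)

for team, spend in per_team.items():
    crossed = budget_alerts(spend, budget=15.0)
    print(f"{team}: thresholds crossed = {crossed}")
```

Tiered thresholds give teams a warning while there is still room to react, instead of a single alert once the budget is already spent.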

Built-in integration with the Azure ecosystem

Azure OpenAI is part of the larger Azure ecosystem.

This integration simplifies the end-to-end lifecycle of building, customizing, and managing AI solutions. You don't have to stitch together separate platforms, which means faster time-to-value and fewer operational headaches.

A trusted foundation for enterprise AI

Microsoft is committed to enabling AI that is secure, private, and safe. That commitment shows up not just in policy, but in product:

- Secure Future Initiative: A comprehensive security-by-design approach.

- Responsible AI principles: Applied across tools, documentation, and deployment workflows.

- Enterprise-grade compliance: Covering data residency, access controls, and auditing.

Get began with Azure AI Foundry