Entering the Serverless era

In this blog, we share the journey of building a Serverless optimized Artifact Registry from the ground up. The main goals are to ensure that container image distribution both scales seamlessly under bursty Serverless traffic and stays available under challenging scenarios such as major dependency failures.

Containers are the modern cloud-native deployment format, featuring isolation, portability and a rich tooling ecosystem. Databricks internal services have been running as containers since 2017. We deployed a mature, feature-rich open source project as our container registry. It worked well because services were generally deployed at a controlled pace.

Fast forward to 2021: when Databricks started to launch its Serverless DBSQL and ModelServing products, millions of VMs were expected to be provisioned each day, and each VM would pull 10+ images from the container registry. Unlike the traffic of other internal services, Serverless image pull traffic is driven by customer usage and can reach a much higher upper bound.
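As a rough lower bound implied by these numbers (taking "millions" as at least $10^6$ VMs per day and counting only 10 images per VM), the average pull rate alone is:

$$
\frac{10^{6}\ \text{VMs/day} \times 10\ \text{images/VM}}{86\,400\ \text{s/day}} = \frac{10^{7}}{86\,400} \approx 116\ \text{pulls/s}
$$

Because this traffic is bursty, peak rates sit above this daily average.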

Figure 1 shows a 1-week production traffic load (e.g. customers launching new data warehouses or MLServing endpoints), in which the Serverless Dataplane peak traffic is more than 100x that of internal services.

Based on our stress tests, we concluded that the open source container registry could not meet the Serverless requirements.

Serverless challenges

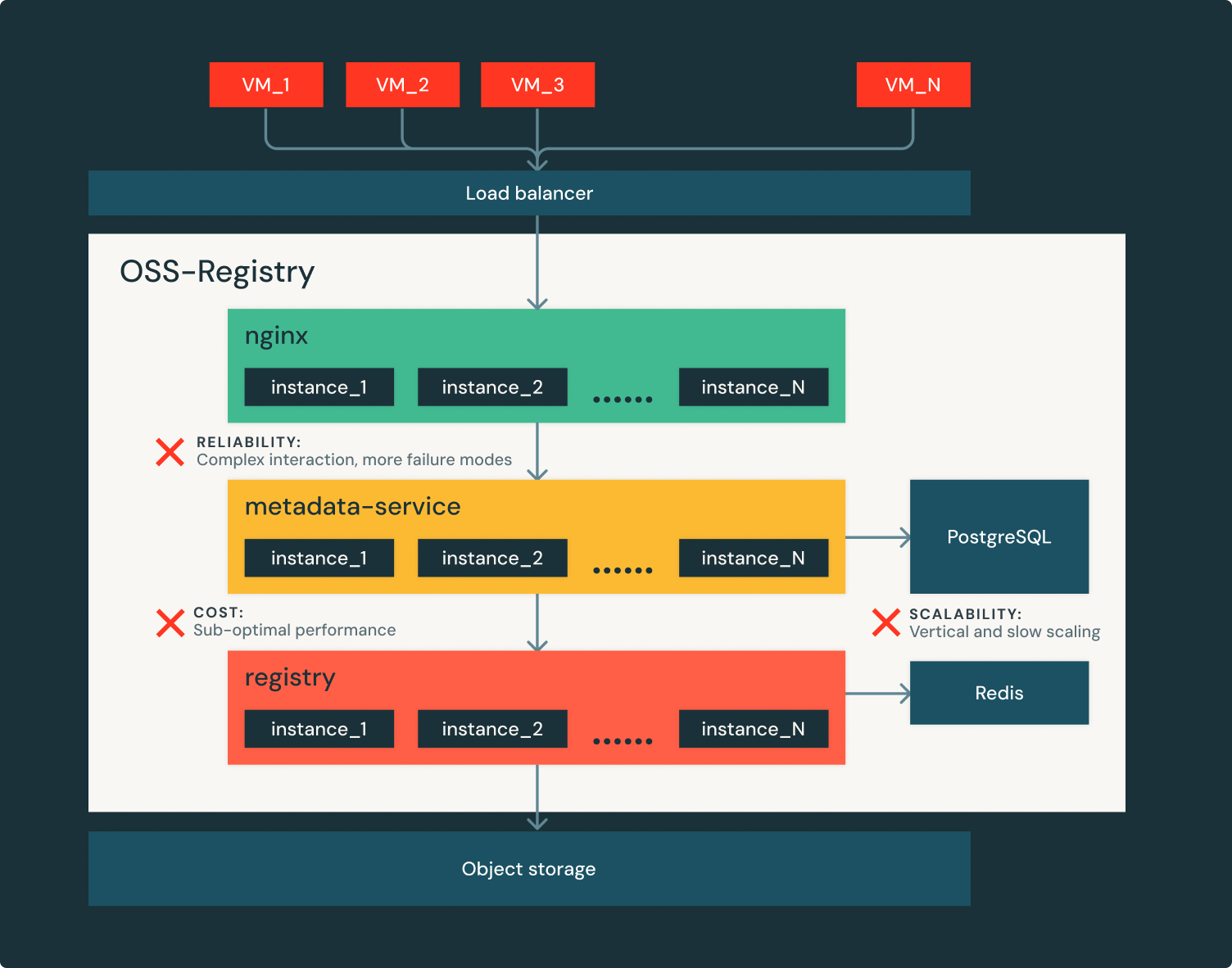

Figure 2 shows the main challenges of serving Serverless workloads with an open source container registry:

- Not sufficiently reliable: OSS registries generally have a complex architecture and dependencies such as relational databases, which introduce failure modes and a large blast radius.

- Hard to keep up with Databricks’ growth: in the open source deployment, image metadata is backed by vertically scaled relational databases and remote cache instances. Scaling them up is slow, sometimes taking 10+ minutes. They can be overloaded when under-provisioned, or too expensive to run when over-provisioned.

- Costly to operate: OSS registries are not performance optimized and tend to have high resource usage (they are CPU intensive). Running them at Databricks’ scale is prohibitively expensive.

What about cloud managed container registries? They are generally more scalable and offer an availability SLA. However, different cloud providers’ services have different quotas, limitations, reliability, scalability and performance characteristics. Databricks operates in multiple clouds, and we found that this heterogeneity across clouds did not meet our requirements and was too costly to operate.

Peer-to-peer (P2P) image distribution is another common approach to reducing registry load, at a different infrastructure layer. It mainly reduces the load on registry metadata but is still subject to the aforementioned reliability risks. We later also introduced a P2P layer to reduce cloud storage egress throughput. At Databricks, we believe that each layer needs to be optimized to deliver reliability for the entire stack.

Introducing the Artifact Registry

We concluded that it was necessary to build a Serverless optimized registry to meet these requirements and to stay ahead of Databricks’ rapid growth. We therefore built Artifact Registry, a homegrown multi-cloud container registry service. Artifact Registry is designed around the following principles:

- Everything scales horizontally:

  - Do not use relational databases; instead, persist the metadata into cloud object storage (an existing dependency for image manifest and layer storage). Cloud object storage is much more scalable and has been well abstracted across clouds.

  - Do not use remote cache instances; the nature of the service allowed us to cache effectively in memory.

- Scaling up/down in seconds: we added extensive caching for image manifests and blob requests to reduce hits on the slow code path (the registry). As a result, only a few instances (provisioned in a few seconds) need to be added instead of hundreds.

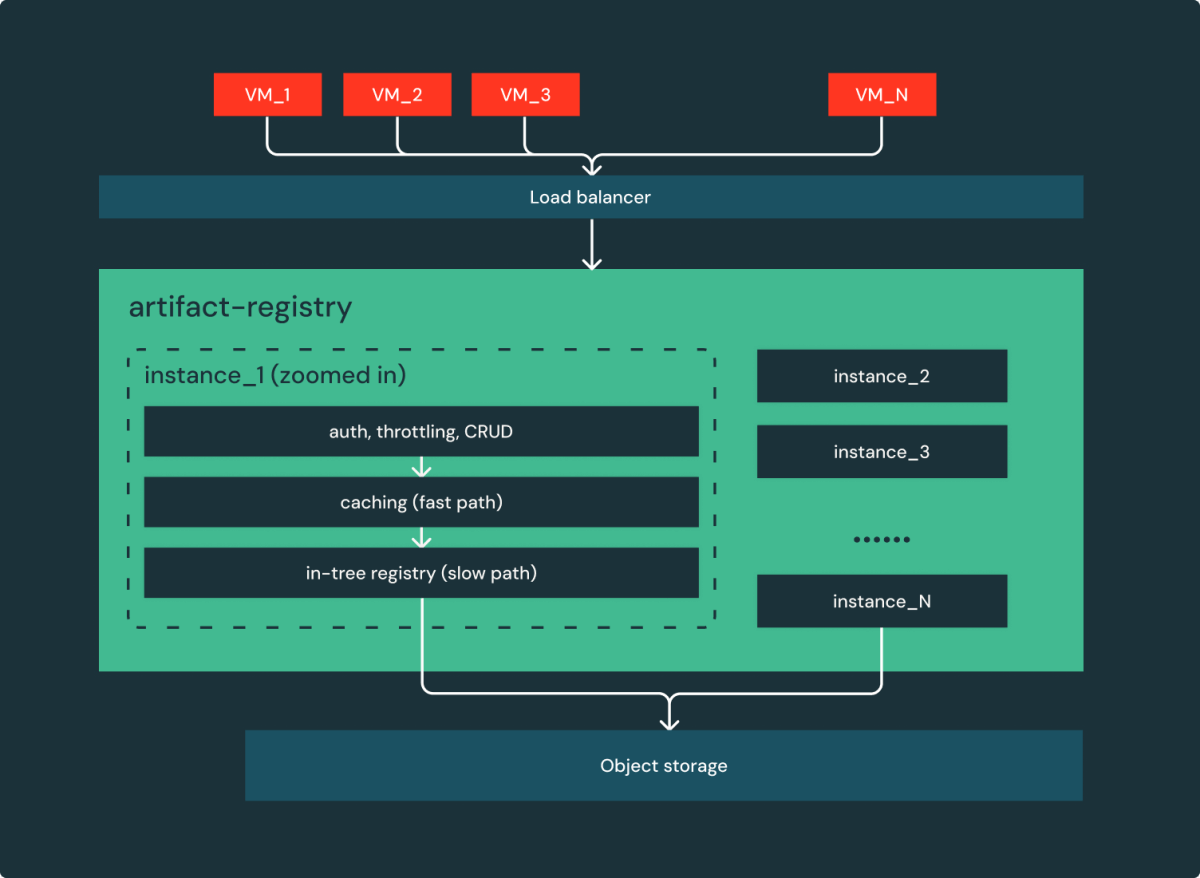

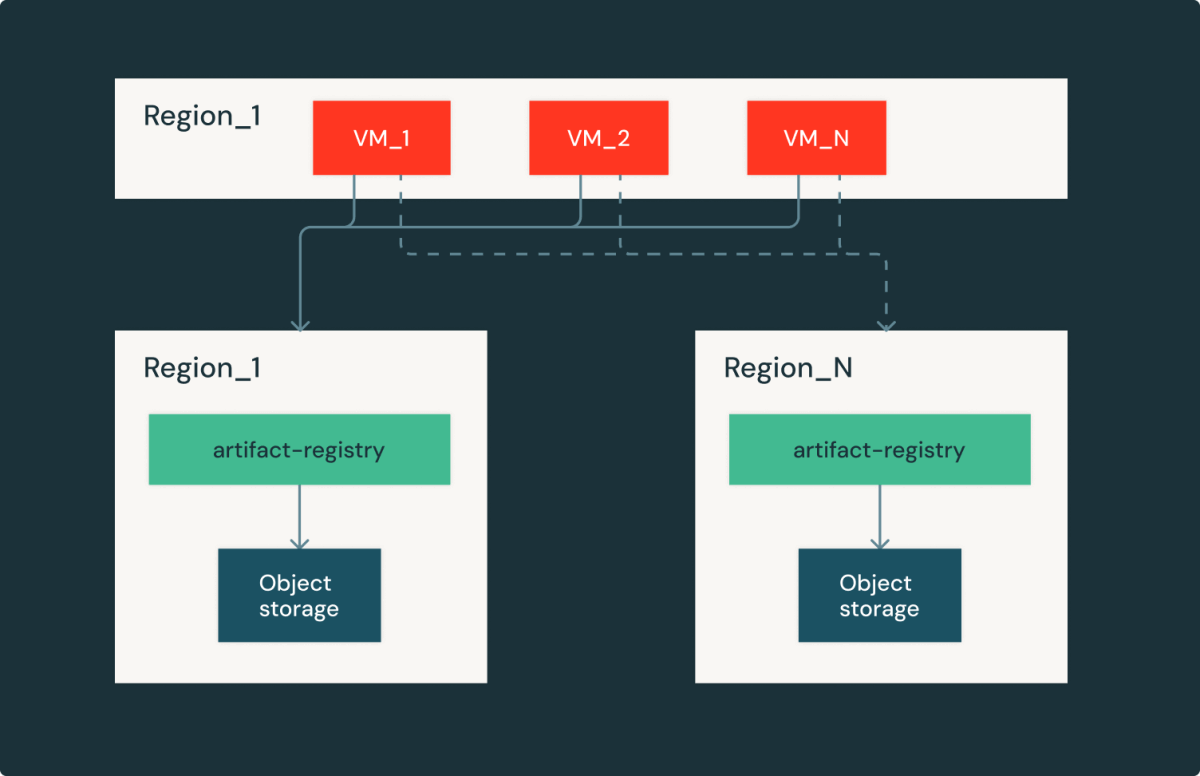

- Simple is reliable: unlike OSS registries, which consist of multiple components and dependencies, Artifact Registry embraces minimalism. As shown in Figure 3, behind the load balancer there is only one component and one cloud dependency (object storage). Effectively, it is a simple, stateless, horizontally scalable web service.
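To make these principles concrete, here is a minimal Python sketch of a stateless read path where all durable state lives in object storage and hot reads are served from an in-process cache. Everything here is hypothetical: `InMemoryObjectStore` stands in for a cloud object store, the key layout (`tags/<repo>/<tag>`, `manifests/<repo>/<digest>`) is our own choice, and the short TTL on tag lookups is an assumption not described in the post. The underlying idea is that digest-addressed content never changes, so it can be cached under a plain LRU bound without invalidation, while mutable tags need expiry.

```python
import time
from collections import OrderedDict
from typing import Callable, Dict, Optional, Tuple


class InMemoryObjectStore:
    """Hypothetical stand-in for a cloud object store (get/put by key)."""

    def __init__(self) -> None:
        self._objects: Dict[str, bytes] = {}
        self.reads = 0  # counts backend reads to show the cache's effect

    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = data

    def get(self, key: str) -> bytes:
        self.reads += 1
        return self._objects[key]  # raises KeyError if the object is missing


class LRUCache:
    """Bounded in-process cache that evicts the least recently used entry."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self._data: "OrderedDict[str, bytes]" = OrderedDict()

    def get(self, key: str) -> Optional[bytes]:
        if key not in self._data:
            return None
        self._data.move_to_end(key)  # mark as most recently used
        return self._data[key]

    def put(self, key: str, value: bytes) -> None:
        self._data[key] = value
        self._data.move_to_end(key)
        if len(self._data) > self.capacity:
            self._data.popitem(last=False)  # drop the oldest entry


class RegistryReadPath:
    """Stateless front end: any instance can serve any request."""

    def __init__(self, store, capacity: int = 1024, tag_ttl_s: float = 5.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.store = store
        self.by_digest = LRUCache(capacity)  # immutable content, no invalidation
        self.tags: Dict[str, Tuple[str, float]] = {}  # unbounded here for brevity
        self.tag_ttl_s = tag_ttl_s
        self.clock = clock

    def resolve_tag(self, repo: str, tag: str) -> str:
        key = f"tags/{repo}/{tag}"
        now = self.clock()
        cached = self.tags.get(key)
        if cached and cached[1] > now:  # still fresh
            return cached[0]
        digest = self.store.get(key).decode()  # slow path: object storage read
        self.tags[key] = (digest, now + self.tag_ttl_s)
        return digest

    def get_manifest(self, repo: str, digest: str) -> bytes:
        key = f"manifests/{repo}/{digest}"
        hit = self.by_digest.get(key)
        if hit is not None:
            return hit
        data = self.store.get(key)  # slow path: object storage read
        self.by_digest.put(key, data)
        return data


store = InMemoryObjectStore()
store.put("tags/app/v1", b"sha256:abc")
store.put("manifests/app/sha256:abc", b'{"schemaVersion": 2}')
registry = RegistryReadPath(store)
for _ in range(3):  # three pulls of the same image
    registry.get_manifest("app", registry.resolve_tag("app", "v1"))
print(store.reads)  # 2: one tag read and one manifest read; the rest hit the cache
```

Because no request depends on state held by a particular instance, adding or removing instances behind the load balancer only changes cache warmth, not correctness.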

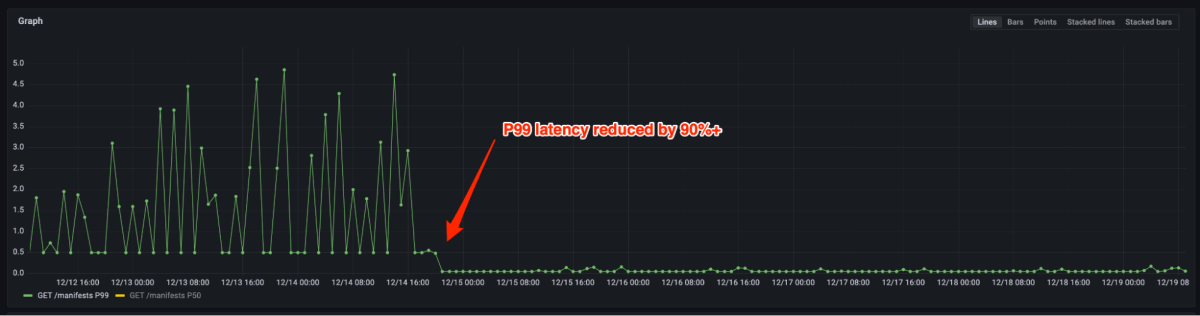

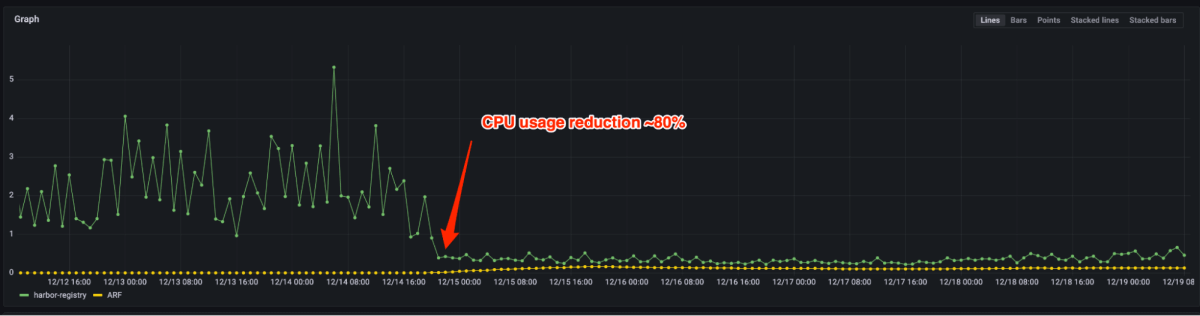

Figures 4 and 5 show that P99 latency dropped by more than 90% and CPU usage by 80% after migrating from the open source registry to Artifact Registry. We now need to provision only a few instances for the same load, versus thousands previously. In fact, handling production peak traffic does not require scaling out in most cases, and when auto-scaling is triggered, it completes in a few seconds.

Surviving cloud object storage outages

With all the reliability improvements above, there is still one failure mode that occasionally occurs: cloud object storage outages. Cloud object storage is generally very reliable and scalable; however, when it is unavailable (sometimes for hours), it can cause regional outages. At Databricks, we try hard to make failures of cloud dependencies as transparent as possible.

Artifact Registry is a regional service, and the instance in each cloud/region has an identical replica. In case of a regional storage outage, image clients can fail over to other regions, at the cost of higher image download latency and egress cost. By carefully curating latency and capacity, we were able to quickly recover from cloud provider outages and continue serving Databricks’ customers.
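Client-side regional failover of this kind could look roughly like the following Python sketch. The region names, the `fetch` callable, the `AllRegionsFailed` exception, and the ordering policy (home region first, then the other replicas in a fixed preference order) are all hypothetical; the post does not describe the actual client logic or how latency and capacity were curated.

```python
from typing import Callable, Dict, Sequence, Tuple


class AllRegionsFailed(Exception):
    """Raised when no regional replica could serve the request."""


def pull_with_failover(
    digest: str,
    home_region: str,
    fallback_regions: Sequence[str],
    fetch: Callable[[str, str], bytes],
) -> Tuple[str, bytes]:
    """Try the home region first, then each fallback replica in order.

    Returns (region_used, data). Cross-region pulls trade higher download
    latency and egress cost for availability.
    """
    errors: Dict[str, OSError] = {}
    for region in [home_region, *fallback_regions]:
        try:
            return region, fetch(region, digest)
        except OSError as exc:  # assumption: storage/network failures surface as OSError
            errors[region] = exc
    raise AllRegionsFailed(f"{digest}: {errors}")


def fake_fetch(region: str, digest: str) -> bytes:
    """Hypothetical fetch where us-east's object storage is down."""
    if region == "us-east":
        raise ConnectionError("object storage unavailable")
    return f"{digest}@{region}".encode()


print(pull_with_failover("sha256:abc", "us-east", ["us-west", "eu-west"], fake_fetch))
```

A real client would likely also bound per-region timeouts and prefer nearby regions first to limit the latency and egress penalty.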

Conclusions

In this blog post, we shared our journey of scaling container registries from serving low-churn internal traffic to customer-facing, bursty Serverless workloads. We purpose-built the Serverless optimized Artifact Registry. Compared to the open source registry, it reduced P99 latency by 90% and resource usage by 80%. To further improve reliability, we made the system tolerate regional cloud provider outages. We also migrated all existing non-Serverless container registry use cases to Artifact Registry. Today, Artifact Registry remains a solid foundation that makes reliability, scalability and efficiency seamless amid Databricks’ rapid growth.

Acknowledgement

Building reliable and scalable Serverless infrastructure is a team effort from our major contributors: Robert Landlord, Tian Ouyang, Jin Dong, and Siddharth Gupta. This blog is also a team effort; we appreciate the insightful reviews provided by Xinyang Ge and Rohit Jnagal.