Snowflake at present introduced the general public preview of Snowpark Join for Apache Spark, a brand new providing that permits clients to run their present Apache Spark code straight on the Snowflake cloud. The transfer brings Snowflake nearer to what’s provided by its essential rival, Databricks.

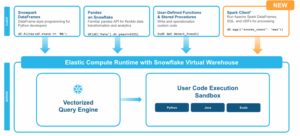

Snowpark Join for Apache Spark lets clients run their DataFrame, Spark SQL, and Spark person outlined operate (UDF) code on Snowflake’s vectorized question engine. This code might be related to a variety of present Spark purposes, together with ETL jobs, information science applications written utilizing Jupyter notebooks, or OLAP jobs that use Spark SQL.

With Snowpark Join for Apache Spark, Snowflake says it handles all of the efficiency tuning and scaling of the Spark code mechanically, thereby liberating clients to deal with creating purposes fairly than managing the technically complicated distributed framework beneath them.

“Snowpark Join for Spark delivers the most effective of each worlds: the ability of Snowflake’s engine and the familiarity of Spark code, all whereas reducing prices and accelerating improvement,” write Snowflake product managers Nimesh Bhagat and Shruti Anand in a weblog submit at present.

Snowpark Join for Spark relies on Spark Join, an Apache Spark mission that debuted in 2022 and went GA with Spark model 3.4. Spark Join launched a brand new protocol, primarily based on gRPC and Apache Arrow, that permits distant connectivity to Spark clusters utilizing the DataFrame API. Primarily, it permits Spark purposes to be damaged up into shopper and server parts, ending the monolithic structured that Spark had used up till then.

Snowpark Join for Apache Spark lets Snowflake clients run Spark workloads with out modification (Picture courtesy Snowflake)

This isn’t the primary time Snowflake has enabled clients to run Spark code on its cloud. It has provided the Spark Connector, which lets clients use Spark code to course of Snowflake information. “[B]ut this launched information motion, leading to further prices, latency and governance complexity,” Bhagat and Anand write.

Whereas efficiency improved with transferring Spark to Snowflake, it nonetheless typically meant rewriting code, together with to Snowpark DataFrames, Snowflake says. With the rollout of Snowpark Join for Apache Spark, clients can now use their Spark code however with out changing code or transferring information, the product managers write.

Clients can entry information saved in Apache Iceberg tables with their Snowpark Join for Apache Spark purposes, together with externally managed Iceberg tables and catalog-linked tables, the corporate says. The providing runs on Spark 3.5.x solely; Spark RDD, Spark ML, MLlib, Streaming and Delta APIs will not be at the moment a part of Snowpark Join’s supported options, the corporate says.

The launch exhibits Snowflake is raring to tackle Databricks and its substantial base of Spark workloads. Databricks was based by the creators of Apache Spark, and constructed its cloud to be the most effective place to run Spark workloads. Snowflake, however, initially marketed itself because the easy-to-use cloud for patrons who have been pissed off with the technical complexity of Hadoop-era platforms.

Whereas Databricks acquired its begin with Spark, it has widened its choices significantly over time, and now it’s gearing as much as be a spot to run AI workloads. Snowflake can be eyeing AI workloads, along with Spark large information jobs.

Associated Gadgets:

It’s Snowflake Vs. Databricks in Dueling Huge Knowledge Conferences

From Monolith to Microservices: The Way forward for Apache Spark

Databricks Versus Snowflake: Evaluating Knowledge Giants